So, you have heard about Azure Hybrid Benefit and wondered what it means. Well, you have come to the right place, because I am going to tell you all about it. Let’s get started…

What is Azure Hybrid Benefit?

It is a benefit that allows you to take the Windows and SQL Server licenses you already own with you to the cloud. As a result, you can save some big dollars on the services you use. The alternative is pay-as-you-go, where the licensing cost is baked into the price of the service and you pay the full amount.

I am no expert on Windows licensing, so I am going to leave that one alone and focus on SQL licensing for the purpose of this post. However, if you need to know more about how your Windows licenses are impacted, you can check the documentation here.

What are the limitations?

As you can probably guess, there are some limitations on using this. I think it’s best to look at these first, so you have an idea whether you qualify.

- You MUST have Software Assurance (SA) on your licenses, and you must maintain it. If at any point you drop Software Assurance on those licenses, you lose this benefit.

- This option is exclusive to Azure. There are options to continue to use your licenses with other cloud providers as well, so all is not lost, but you need to understand their terms. Click here to learn more about SQL licensing in AWS and here for Google Cloud.

- It cannot be applied retroactively. Basically, if you have a service already running that qualifies for this, but did not apply it, you are not entitled to any refund or adjustment. The savings only start at the time you implement it.

What are the benefits?

The main and most notable benefit is that it allows you to transfer your licenses to the cloud and save money, which is pretty awesome, but it provides some other benefits as well.

- Security updates for SQL Server 2008/2008 R2, which went out of support last year. If you are stuck on this version on-prem, you are out of support unless you paid for the very expensive extended support. Moving to an Azure VM gives you room to work through an upgrade without carrying that security risk in the meantime.

- It provides you with a 180-day grace period to complete your migration. This means you can use the same license on your on-prem server and your cloud service at the same time. Note, though, that you can only do this for the purposes of the migration, so no sneaky stuff here.

- Combined with reserved pricing, you can get some really whopping savings. With reserved pricing, you commit to using a service for a designated period of time; the longer you commit, the higher the savings.

How much can I really save?

Really glad you asked, because guess what? Azure has a couple of calculators that can help you determine that.

The first one is the Azure Hybrid Benefit Savings Calculator. If you do not see it immediately when you go to the link, just scroll down; it’s trying to hide. This calculator is specific to Azure Hybrid Benefit: you plug in your build and see your savings.

The other option is to use the Azure Pricing Calculator. This one is not exclusive to Azure Hybrid Benefit but may be a tool you are already using to estimate the cost of your migration (or at least you should be). To see the savings with this tool, just add an eligible service and check the box to include Azure Hybrid Benefit. The Azure Pricing Calculator is also the tool you can use to understand your savings with Reserved Pricing.

How is licensing different in the cloud than on-premises?

The same rules that exist on-prem for SQL licensing also exist in the cloud unless noted. If you are unsure what to look out for or want to evaluate your build, you can plug it into my licensing calculator script to check.

One notable gotcha that is easy to overlook is the licensing minimum, which is 4 cores (2 licenses). You can build services that use fewer cores than this, but keep in mind that you are paying for licenses you are not using. If you need less, consider using elastic pools, provisioning by DTU with Azure SQL Database, or some other combination method.
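To make the minimum concrete, here is a small Python sketch of how many licenses a build actually bills for. It assumes licenses are counted as 2-core packs, as in the text, that the 4-core minimum applies per build, and that an odd core count rounds up to the next whole license. The function names are hypothetical and not part of any Microsoft tool.

```python
import math

CORES_PER_LICENSE = 2   # one license covers 2 cores, as described above
MIN_LICENSED_CORES = 4  # the 4-core (2-license) minimum


def licenses_required(cores: int) -> int:
    """Number of 2-core licenses you pay for after applying the minimum."""
    if cores < 1:
        raise ValueError("cores must be a positive integer")
    licensed_cores = max(cores, MIN_LICENSED_CORES)
    # Assumption: an odd core count rounds up to a whole license.
    return math.ceil(licensed_cores / CORES_PER_LICENSE)


def unused_licensed_cores(cores: int) -> int:
    """Cores you are paying for but not using because of the minimum."""
    return licenses_required(cores) * CORES_PER_LICENSE - cores


for cores in (2, 4, 6):
    print(cores, licenses_required(cores), unused_licensed_cores(cores))
# 2 2 2
# 4 2 0
# 6 3 0
```

A 2-core build still pays for 2 licenses, leaving 2 licensed cores idle, which is exactly the waste the pooling and DTU options help you avoid.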

In terms of how your SQL licenses translate, here are a few considerations to be aware of:

- 1 Enterprise license (2 Cores) = 4 Standard licenses (8 cores) for General Purpose service tiers (x4 multiplier). Standard licenses are 1:1.

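- At MSRP, a Standard Edition license costs about 26% of an Enterprise Edition license, so the 4 Standard licenses you get for each Enterprise license would cost slightly more than the Enterprise license itself (4 x 26% = 104%). Microsoft is giving you a little bit of a bargain here, which can save you a few dollars should you choose to transfer Enterprise licenses.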

- 1 Enterprise license (2 Cores) = 1 Enterprise license (2 Cores) for Business Critical service tiers (1:1). You must use Enterprise Edition for anything that uses the Business Critical service tier.
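The conversion rules above can be sketched as a lookup table in Python. The ratios come straight from the list above (Enterprise x4 and Standard 1:1 for General Purpose, Enterprise 1:1 for Business Critical, Standard not allowed on Business Critical); the tier labels and function name are hypothetical, and you should confirm the ratios against Microsoft's current licensing terms before relying on them.

```python
from typing import Dict, Tuple

CORES_PER_LICENSE = 2

# Azure cores covered per licensed core, keyed by (edition, service tier).
VCORE_MULTIPLIER: Dict[Tuple[str, str], int] = {
    ("enterprise", "general_purpose"): 4,
    ("standard", "general_purpose"): 1,
    ("enterprise", "business_critical"): 1,
}


def covered_vcores(edition: str, tier: str, licenses: int) -> int:
    """Cores a number of 2-core licenses covers on the given service tier."""
    key = (edition.lower(), tier.lower())
    if key not in VCORE_MULTIPLIER:
        # Standard Edition cannot be used for the Business Critical tier.
        raise ValueError(f"{edition} licenses cannot cover the {tier} tier")
    return licenses * CORES_PER_LICENSE * VCORE_MULTIPLIER[key]


print(covered_vcores("Enterprise", "general_purpose", 1))    # 8
print(covered_vcores("Standard", "general_purpose", 2))      # 4
print(covered_vcores("Enterprise", "business_critical", 1))  # 2
```

Raising an error for Standard on Business Critical mirrors the rule that this tier requires Enterprise Edition, rather than silently returning zero cores.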

How do I use it?

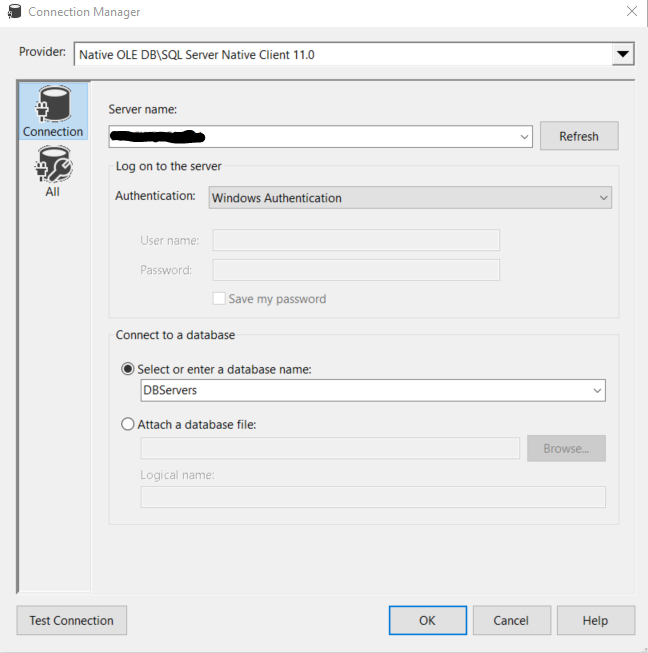

It’s pretty easy, actually. Whenever you build an eligible service in Azure, just check the box when it asks whether you already have licenses you own and plan to use.

Does it make sense to purchase licenses instead of using the baked in licensing cost?

This is a really interesting question. If you are thinking of moving to Azure and you do not have software assurance or licenses available to transfer, should you buy the licenses or use the pay-as-you-go option? Spoiler alert: it probably depends.

Let’s take a look at an example Azure SQL Database with the following specs:

4 Core, Gen 5, South Central Region, General Purpose Service Tier, Standard Edition

With this example and using the Azure Hybrid Benefit Savings Calculator, I see that I can save $292 a month today using Azure Hybrid Benefit (a 35.4% overall savings). Another way of looking at this is that without Azure Hybrid Benefit, I am paying $292 a month more for pay-as-you-go licensing. Over an entire year that is $3,504 ($292 x 12), so let’s see how this compares to buying the license instead.

Because we are using MSRP Azure pricing, I will do the same with the SQL Standard licenses. Today, if I buy 2 Standard Edition licenses (which is what I need for this), it will cost me $7,436. That is just the cost of the licenses, though, and does not include Software Assurance, which is required to use them in Azure. As a starting point, I recommend estimating SA at 30% of the license cost per year, which works out to roughly $2,231. So your first-year investment would be around $9,667, plus an additional $2,231 each year after that to maintain SA.
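Here is that first-year arithmetic as a short Python sketch. The $7,436 license cost and the 30% SA rule of thumb come from the paragraph above; the rate is only an estimate, so swap in the SA percentage from your own agreement. The function names are hypothetical.

```python
LICENSE_COST = 7436  # MSRP for 2 Standard Edition licenses, from the text
SA_RATE = 0.30       # rule-of-thumb yearly SA cost as a share of license cost


def annual_sa_cost(license_cost: float, sa_rate: float = SA_RATE) -> int:
    """Estimated yearly Software Assurance cost, rounded to whole dollars."""
    return round(license_cost * sa_rate)


def first_year_investment(license_cost: float, sa_rate: float = SA_RATE) -> int:
    """License purchase plus the first year of SA."""
    return round(license_cost) + annual_sa_cost(license_cost, sa_rate)


print(annual_sa_cost(LICENSE_COST))         # 2231
print(first_year_investment(LICENSE_COST))  # 9667
```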

Let’s look at a year over year total investment using both methods:

| | Pay as you go (cumulative) | Buy your own (cumulative) |
|---|---|---|
| Year 1 | $3,504 | $9,667 |
| Year 2 | $7,008 | $11,898 |
| Year 3 | $10,512 | $14,129 |
| Year 4 | $14,016 | $16,360 |
| Year 5 | $17,520 | $18,591 |
| Year 6 | $21,024 | $20,822 |

Using these numbers, it would take 6 years before buying your own license would start to save you money. And Azure changes so often that by that point, enough factors could have changed that pay-as-you-go still ends up the cheaper option. Looking at it another way, it would take just over 2 years of pay-as-you-go licensing ($7,436 / $3,504 per year, or about 2.1 years) to match the up-front cost of the Standard Edition licenses alone, excluding SA. Use that as a baseline if you are paying less than MSRP for your licenses. Before making a decision, though, I recommend plugging in your own licensing numbers, since pricing can vary based on your agreements.
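If you want to rerun this comparison with your own numbers, here is a minimal Python sketch that rebuilds the year-over-year table and finds the break-even year. It assumes the $292 monthly pay-as-you-go premium and the $2,231 yearly SA cost stay flat, with no price changes, discounts, or reserved pricing; the constants and function names are illustrative, not taken from any Azure tool.

```python
from typing import List, Tuple

MONTHLY_PAYG_PREMIUM = 292  # extra monthly cost without Azure Hybrid Benefit
LICENSE_COST = 7436         # 2 Standard Edition licenses at MSRP
ANNUAL_SA = 2231            # estimated SA cost per year (~30% of license cost)


def cumulative_costs(years: int) -> List[Tuple[int, int, int]]:
    """(year, pay-as-you-go total, buy-your-own total) for each year."""
    annual_payg = MONTHLY_PAYG_PREMIUM * 12
    rows = []
    for year in range(1, years + 1):
        payg = annual_payg * year
        # License is paid once; SA is paid every year, including year 1.
        own = LICENSE_COST + ANNUAL_SA * year
        rows.append((year, payg, own))
    return rows


def break_even_year(max_years: int = 20) -> int:
    """First year in which owning the license is cheaper in total."""
    for year, payg, own in cumulative_costs(max_years):
        if own < payg:
            return year
    raise ValueError("no break-even within max_years")


for year, payg, own in cumulative_costs(6):
    print(f"Year {year}: pay as you go ${payg:,} | buy your own ${own:,}")
print(break_even_year())                                      # 6
print(round(LICENSE_COST / (MONTHLY_PAYG_PREMIUM * 12), 1))   # 2.1
```

Comparing cumulative totals rather than yearly costs is what makes the crossover visible: owning is cheaper every year after purchase, but it takes until year 6 to pay back the up-front license cost.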

Also, beyond cost, you may want to consider purchasing your own license if you think you may need to reuse it down the road, such as when migrating out of the cloud, or if you are using the cloud as a temporary stopgap. With pay-as-you-go, the license is baked into the service you are running, so you cannot use it for anything else.

As for the test, I ran it with multiple scenarios on both Azure SQL Database and Managed Instance, and the results were the same: the licensing cost per core stayed the same, so the numbers simply got bigger as I added cores.

Other Suggestions:

Whenever you are looking at pricing information from a cloud provider online, remember that you are looking at MSRP pricing. There may be other opportunities to increase your savings, so I always highly recommend working with your cloud representatives and/or Microsoft.

Wrap-up

Azure Hybrid Benefit is a great way to utilize your already purchased licenses for Azure and save yourself a lot of money in the process. Remember though that in order to use this benefit you must have and maintain software assurance.

Hopefully, this post has been helpful. If you have any questions, add a comment or send me a message; I’m happy to help! For more information, you can also review the Microsoft documentation.